Step 1: Configure AWS Route 53 and Create a Subdomain

To establish our website under the Nighthawk Coding Society, we will create a subdomain using AWS Route 53. This allows us to set up a custom domain for our deployed application.

- Access AWS Route 53: Log in to AWS and navigate to the Route 53 dashboard.

- Create a Subdomain: Within the hosted zone for Nighthawk Coding Society, add a new A record (or CNAME if applicable) to point the subdomain to our EC2 instance’s public IP.

Step 2: Update Ports and Configure Application for Deployment

To ensure our application runs on a unique port, we will change all instances of port 8887 to port 8402 across our project.

### Update Python Backend

Modify main.py to run on the new port:

```python
if __name__ == "__main__":
    app.run(debug=True, host="0.0.0.0", port=8402)
```

### Update Frontend Configuration

Change assets/api/config.js so the frontend fetches from the Python backend on the new port:

```js
const pythonURI = "http://localhost:8402";
```

### Modify Docker Configuration

Dockerfile:

```dockerfile
ENV GUNICORN_CMD_ARGS="--workers=1 --bind=0.0.0.0:8402"
EXPOSE 8402
```

docker-compose.yml:

```yaml
ports:
  - "8402:8402"
```

### Update Nginx Configuration

Modify the nginx.conf file to route traffic to the new port:

```nginx
proxy_pass http://localhost:8402;
```
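One way to confirm that no reference to the old port survived the edits above is to scan the project for it. The following is a minimal sketch, not part of the project: the helper name `find_port_references` and the list of file types it scans are assumptions chosen for illustration.

```python
from pathlib import Path

# File types to scan. This list is an assumption; adjust it to the project.
SCAN_SUFFIXES = {".py", ".js", ".yml", ".yaml", ".conf"}
SCAN_NAMES = {"Dockerfile", "Makefile"}


def find_port_references(root, port="8887"):
    """Return (path, line_number, line) for every scanned line mentioning `port`."""
    root = Path(root)
    hits = []
    for path in sorted(root.rglob("*")):
        if not path.is_file():
            continue
        # Skip Git internals, which may contain old copies of every file.
        if ".git" in path.relative_to(root).parts:
            continue
        if path.suffix not in SCAN_SUFFIXES and path.name not in SCAN_NAMES:
            continue
        text = path.read_text(encoding="utf-8", errors="ignore")
        for number, line in enumerate(text.splitlines(), start=1):
            if port in line:
                hits.append((str(path), number, line.strip()))
    return hits
```

Calling `find_port_references(".")` from the project root should return an empty list once every 8887 has been replaced.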

Step 3: AWS EC2 Setup

To host our application, we need to set up an EC2 instance on AWS. First, we log in to the AWS Console and navigate to the EC2 dashboard. We select the instance that will host our application, ensuring it is properly configured to allow incoming traffic on port 8402. Security groups should also be updated to permit HTTP (port 80) and HTTPS (port 443) requests. Finally, we verify that our instance has Docker and Docker Compose installed to run the application containers.

Step 4: Application Deployment Through VSCode

Before deploying to AWS, we test our application locally. We run `docker ps` to see which ports are already in use and confirm that port 8402 is free. In the backend terminal, we run `make` to start the server, then replace any references to localhost:8887 with http://127.0.0.1:8402. This ensures the frontend can correctly communicate with the backend through the new port.
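The `docker ps` check only covers ports held by containers. As a complementary sketch (the helper name `port_is_free` is hypothetical), Python's standard `socket` module can test whether anything at all is listening on the port:

```python
import socket


def port_is_free(port, host="127.0.0.1"):
    """Return True if nothing accepts a TCP connection on host:port."""
    with socket.socket(socket.AF_INET, socket.SOCK_STREAM) as sock:
        sock.settimeout(0.5)
        # connect_ex returns 0 only when a listener accepts the connection.
        return sock.connect_ex((host, port)) != 0
```

For this project the call would be `port_is_free(8402)`; a result of `False` means some process is already using the port.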

Step 5: Deployment in AWS EC2 Terminal

Once the local test is successful, we move to deployment on AWS. We SSH into our EC2 instance and clone the project repository. Inside the project directory, we run `docker-compose up -d --build` to build and start the application in the background. To test the deployment, we replace localhost with our EC2 public IP and verify that the site is accessible on port 8402.

Step 6: Route 53 and Nginx Setup

To map our domain to the EC2 instance, we create a new DNS record in AWS Route 53, pointing the subdomain to the public IP of our EC2 instance. Then, we configure Nginx as a reverse proxy to forward traffic to our application. We write a custom Nginx configuration file, update the proxy_pass to http://localhost:8402, and restart Nginx to apply the changes.
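After the record is created, it can take a little while for DNS changes to propagate. A small, hypothetical helper such as `resolves_to` below can confirm that the subdomain resolves to the instance before Nginx and Certbot are configured. The hostname and IP address in the usage note are placeholders, not the project's real values.

```python
import socket


def resolves_to(hostname, expected_ip):
    """Return True if `hostname` currently resolves to `expected_ip` (IPv4)."""
    try:
        _, _, addresses = socket.gethostbyname_ex(hostname)
    except OSError:
        # Covers "name not found" and other resolver errors.
        return False
    return expected_ip in addresses
```

A call might look like `resolves_to("myapp.example.com", "203.0.113.10")`, with the real subdomain and the EC2 public IP substituted in.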

Step 7: Certbot Certificate Setup

To enable HTTPS, we use Certbot to generate an SSL certificate for our domain. Running `sudo certbot --nginx` obtains the certificate and automatically configures Nginx to serve the website over HTTPS. We also update the Nginx configuration to redirect all HTTP traffic to HTTPS for security. After confirming the certificate installation, we restart Nginx to apply the changes.

Step 8: Code Updates and Version Control

Once deployment is complete, any necessary code changes must be carefully managed. We first run `git pull` to ensure we have the latest version of the code. Any changes are committed with meaningful messages, but commits should not be made directly from the AWS terminal. Locally, we test modifications by running `docker-compose up` and accessing http://127.0.0.1:8402 in a browser.

Step 9: Synchronizing AWS with Latest Changes

To apply new updates in AWS, we SSH into the EC2 instance and navigate to the project directory. We first stop the running containers using `docker-compose down`, then pull the latest changes using `git pull`. After ensuring everything is up to date, we rebuild and restart the containers using `docker-compose up -d --build`.
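The three commands above always run in the same order, so they can be wrapped in a small script. This is only a sketch, assuming `docker-compose` and `git` are on the PATH of the EC2 instance; the function name `redeploy` and the project path in the usage note are hypothetical.

```python
import subprocess

# The three commands from the step above, in the order they are run.
REDEPLOY_COMMANDS = [
    ["docker-compose", "down"],
    ["git", "pull"],
    ["docker-compose", "up", "-d", "--build"],
]


def redeploy(project_dir, run=subprocess.run):
    """Run each command inside project_dir, stopping at the first failure."""
    for command in REDEPLOY_COMMANDS:
        # check=True makes subprocess.run raise CalledProcessError
        # when a command exits with a non-zero status.
        run(command, cwd=project_dir, check=True)
```

On the instance this could be invoked as `redeploy("/home/ubuntu/my_project")`, with the real project directory in place of the placeholder. The `run` parameter exists only so the sequence can be tested without touching Docker.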

Step 10: Final Testing and Debugging

After deployment, we thoroughly test the website by checking different features and ensuring all API requests are functioning correctly. If any errors are found, we debug by inspecting the container logs with `docker logs`.
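Part of this testing can be automated with a short smoke test. The sketch below uses only the standard library; the helper name `check_endpoints` is hypothetical, and the paths to probe are left to the reader because the site's actual API routes are not listed here.

```python
import urllib.error
import urllib.request


def check_endpoints(base_url, paths, timeout=5):
    """Return {path: HTTP status code, or None if no response was received}."""
    results = {}
    for path in paths:
        url = base_url.rstrip("/") + path
        try:
            with urllib.request.urlopen(url, timeout=timeout) as response:
                results[path] = response.status
        except urllib.error.HTTPError as error:
            # The server answered, but with a 4xx or 5xx status.
            results[path] = error.code
        except (urllib.error.URLError, OSError):
            # Nothing answered: wrong port, container down, firewall, etc.
            results[path] = None
    return results
```

For example, `check_endpoints("http://127.0.0.1:8402", ["/"])` probes the local backend. A value of `None` suggests the container is not reachable, which is a good cue to look at the logs.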

4.1 Notes (Video 1)

- Computers have evolved over time, becoming smaller and more efficient while using routers and other devices for communication.

- Computers are capable of both sending and receiving data.

- They “talk” to each other using number systems, breaking data into ones and zeros for transmission.

- This system of 0s and 1s is called binary. It is the language that computers understand.

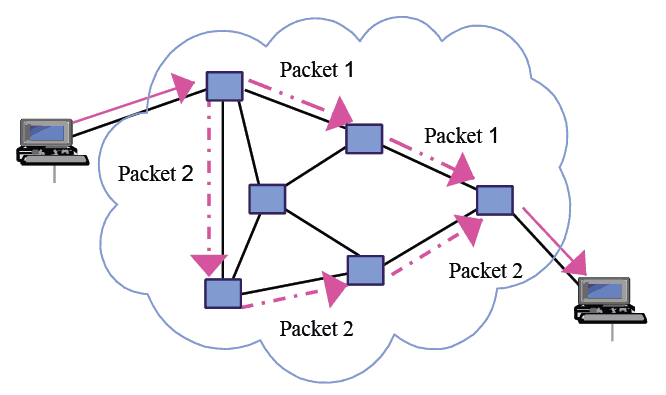

- A packet is a group of numbers that carries information between devices.

- A computer network is a collection of interconnected computing devices that can send and receive data.

- Packet Switching: Messages (files) are divided into packets and sent in any order. The recipient’s device reassembles them upon arrival.

- A path between two computing devices consists of a sequence of directly connected devices, starting at the sender and ending at the receiver.

- Routing is the process of determining the most efficient path for data to travel.

- Computers rely on communication to streamline tasks, with packets facilitating the movement of information in bits.
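To make the packet ideas above concrete, here is a small illustrative simulation (all function names are made up for this example): a message is shown as bits, split into numbered packets, delivered out of order, and reassembled by sequence number.

```python
import random


def to_bits(text):
    """Show text as the 0s and 1s a computer would transmit (8 bits per byte)."""
    return " ".join(format(byte, "08b") for byte in text.encode("utf-8"))


def to_packets(message, size=4):
    """Split a message into (sequence_number, chunk) packets."""
    return [(number, message[start:start + size])
            for number, start in enumerate(range(0, len(message), size))]


def deliver_out_of_order(packets):
    """Simulate packets taking different routes by shuffling a copy."""
    shuffled = packets[:]
    random.shuffle(shuffled)
    return shuffled


def reassemble(packets):
    """Rebuild the message by sorting packets on their sequence number."""
    return "".join(chunk for _, chunk in sorted(packets))
```

Because every packet carries its own sequence number, `reassemble(deliver_out_of_order(to_packets("Hello, network!")))` returns the original message regardless of arrival order.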

4.1 Notes (Video 2)

- The Internet Engineering Task Force (IETF) manages internet standards and technical discussions through an open, collaborative process.

- The OSI (Open Systems Interconnection) model defines seven layers of protocols required for network communication.

- TCP (Transmission Control Protocol) establishes a standardized method for sending messages between internet-connected devices.

- Network Access Layer: Handles hardware-related connections, such as network cards or cables.

- Internet Protocol (IP) Layer: Transfers data using packets containing metadata, including IP addresses, to direct information through routers.

- Transport Layer: Uses TCP (which opens each connection with a three-way handshake and delivers data reliably and in order) and UDP (a connectionless protocol with no handshake and no delivery guarantee) to enforce rules for data transmission.

- Application Layer: Runs programs on internet-connected devices and provides clients with webpages.

- IP addresses help direct packets to their intended destinations, allowing routers to manage the flow of information.
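As a rough illustration of the transport layer, the sketch below opens a TCP connection on the local machine and echoes a message back. The function names are hypothetical, and the three-way handshake itself is carried out by the operating system when the client connects, so it is not visible in the code.

```python
import socket
import threading


def start_echo_server(host="127.0.0.1"):
    """Start a one-shot TCP echo server and return the port it listens on."""
    server = socket.socket(socket.AF_INET, socket.SOCK_STREAM)
    server.bind((host, 0))  # port 0 lets the OS pick any free port
    server.listen(1)

    def serve_once():
        connection, _ = server.accept()
        with connection:
            # Send back whatever the client sent.
            connection.sendall(connection.recv(1024))
        server.close()

    threading.Thread(target=serve_once, daemon=True).start()
    return server.getsockname()[1]


def tcp_round_trip(port, payload, host="127.0.0.1"):
    """Connect over TCP, send payload, and return the echoed bytes."""
    # create_connection returns only after the TCP connection is established.
    with socket.create_connection((host, port), timeout=2) as client:
        client.sendall(payload)
        return client.recv(1024)
```

Here the IP address (127.0.0.1) identifies the machine and the port identifies the program on it, which mirrors how the IP and transport layers divide the work.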

4.2 Notes (Video 1)

- Fault tolerance ensures that the internet can function even when failures occur within the system.

- Networks can be physically connected through wiring, and increasing the number of connections improves communication speed.

- Fewer paths increase the likelihood of network failures disconnecting devices.

- Redundancy helps networks remain operational despite errors, enhancing fault tolerance. If one path fails, other devices can still communicate.

- If a single failure disrupts the entire system, the design is flawed. The internet remains reliable due to its fault tolerance.

4.2 Notes (Video 2)

- Networks can scale as more devices are added. Interconnected networks with redundancy adapt to changing routes.

- Data does not follow a single route but instead finds the quickest and most reliable path. Redundancy ensures network reliability, even if a device or connection fails.

- More connections strengthen networks, enabling them to expand and adapt while maintaining efficiency.

- The internet exemplifies fault tolerance, allowing new devices to integrate seamlessly while preventing disruptions.
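The effect of redundancy on fault tolerance can be sketched with a tiny network model. In this illustrative example (the network layout and the function name `find_path` are invented), a breadth-first search finds the route with the fewest hops, and removing a link shows whether another route still exists.

```python
from collections import deque


def find_path(links, start, goal):
    """Breadth-first search: return a path with the fewest hops, or None."""
    neighbors = {}
    for a, b in links:
        # Links work in both directions.
        neighbors.setdefault(a, set()).add(b)
        neighbors.setdefault(b, set()).add(a)
    queue = deque([[start]])
    visited = {start}
    while queue:
        path = queue.popleft()
        if path[-1] == goal:
            return path
        for device in sorted(neighbors.get(path[-1], ())):
            if device not in visited:
                visited.add(device)
                queue.append(path + [device])
    return None


# A network with two independent routes from A to D.
links = [("A", "B"), ("B", "D"), ("A", "C"), ("C", "E"), ("E", "D")]
print(find_path(links, "A", "D"))  # ['A', 'B', 'D']

# The B-D link fails; traffic is rerouted through C and E.
after_failure = [link for link in links if link != ("B", "D")]
print(find_path(after_failure, "A", "D"))  # ['A', 'C', 'E', 'D']
```

With only the links A-B and B-D, the same failure would leave no route at all and `find_path` would return `None`, which is the single point of failure the notes warn about.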

4.3 Notes

- Computers perform multiple system tasks (which keep the system running) and user tasks (selected by users).

- Tasks are often executed sequentially (A → B → C), meaning one task must finish before the next can begin.

- Parallel Computing divides a program into multiple operations, some of which run simultaneously.

- Parallel computing is hardware-driven, enabling faster processing and optimizing data communication. It is commonly used in gaming.

- With enough processors, the longest-running task determines the completion time of the parallel portion, which is why parallel execution usually finishes sooner than running the same tasks sequentially.

- Distributed Computing involves multiple computers working together to run a program.

- It integrates both sequential and parallel computing for optimized performance.
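A short calculation shows how the sequential and parallel completion times compare. This is a simplified sketch with made-up task durations; `parallel_time` assumes the tasks are independent and hands each one, in order, to whichever processor is free first.

```python
def sequential_time(task_times):
    """One processor runs every task back to back."""
    return sum(task_times)


def parallel_time(task_times, processors):
    """Give each task, in order, to the processor that becomes free first."""
    finish = [0] * processors
    for duration in task_times:
        earliest = finish.index(min(finish))
        finish[earliest] += duration
    # The run is over when the busiest processor finishes.
    return max(finish)


def speedup(task_times, processors):
    """How many times faster the parallel run is than the sequential one."""
    return sequential_time(task_times) / parallel_time(task_times, processors)


tasks = [50, 30, 40]  # hypothetical durations in seconds
print(sequential_time(tasks))   # 120
print(parallel_time(tasks, 2))  # 70
print(parallel_time(tasks, 3))  # 50, the longest single task
```

With three processors every task gets its own processor, so the total time equals the longest task, matching the note above.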

Deployment Plan:

My Role (Michelle): I will handle debugging (troubleshooting in AWS EC2).